When my students log in to their course LMS this semester, they will encounter this warning:

This course is an AI-Free Zone: Because I am interested in what you think and in your own learning—including learning to communicate effectively in writing—the use of generative AI tools, such as ChatGPT, Claude, Copilot, Gemini, Grammarly Pro, etc., is strictly forbidden in this class.

- All written assignments for this course must be your own work presented in your own words.

- Students must be able to talk cogently about their written assignments and their research process. At the professor’s discretion, an oral interview may be required before a grade is assigned for any assignment. Failure to participate in the interview process will result in a 0 on the assignment.

(For good measure, the same statement appears on my syllabi.)

I am not, I think, naïve. I don’t expect perfect compliance, nor do I imagine I will be able to tell every time AI is used. I am also aware that this is not a structural change to course assessment: Rather than materially altering my assignments, I am relying on students to choose to follow my class rules instead of turning to the low-hanging fruit that is ChatGPT, Microsoft Copilot and other Large Language Models that masquerade as “artificial intelligence.”

But here’s the thing: AI-bots are very good at producing the kind of introductory thinking-piece essay that teachers have used for generations. The point of these assignments is not for students to make a new contribution to knowledge, but to learn as they think through classic intellectual problems on their own. These assignments have been used for so long because they work, or at least they did until AI-bots came along. All of a sudden we have to look for alternatives to traditional but effective ways of helping students learn skills such as summarizing, analyzing, and synthesizing. We may eventually come up with workarounds as good as or better than our current approaches to teaching, but we aren’t there yet.

So instead of totally changing the way I teach, and in the process abandoning effective ways of helping students learn, I will try once more to persuade my students that turning to AI to complete assignments in my courses will only hurt them.

Here is one more reason why:

With great texts, the reading is its own reward. No potted summary of Plato’s Republic or Homer’s Odyssey will substitute for the real thing. These rich texts can’t be absorbed in a single reading; give them years of attention and they will still have more to say. As a teacher in the Humanities, a major part of my job is helping students learn to read, and that includes reading and thinking about difficult texts. Again, it is the struggle that matters for learning and thinking. And so a Coles Notes version or an AI summary will not help students learn how to summarize or synthesize, and it will not get the texts inside them in a way that shapes their lives.

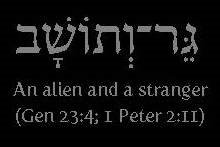

What is true about Plato's Republic is even more true about the Bible, a collection of texts whose meaning and implications cannot be exhausted. As a Christian educator, one of my primary aims is to help people connect with the text of Scripture. The point is not so much the right answer or correct prose, but getting the text inside you deep enough that it can do its inspired work.

Thankfully, in my confessional setting students tend to be open to this kind of argument. Some will continue to cheat themselves (and their professor). My role is not primarily to police the boundaries, but to try to create conditions that will motivate and enhance learning. Wish me luck.
